The Rise of MCP Servers: Gaining the Edge in the Era of Agentic Automation

The era of building custom, brittle integrations for every new AI model is over. The Model Context Protocol (MCP) is emerging as the universal standard, a "USB-C port" for AI: MCP servers let Large Language Models (LLMs) plug into your data and tools without a custom integration for each model. Adopting this standard is no longer just a technical decision; it is a strategic advantage for faster product development and robust automation.

Shrikant Shinde

9/25/2025 · 4 min read

Why it matters:

Standardization: Replaces complex, custom API glue code with a single protocol.

Portability: Switch AI models (Claude, GPT-4, Gemini), or the MCP-compatible apps that run them, without rewriting your tool integrations.

Agency: Empowers AI to actually do things (execute code, query databases) rather than just talk.

Introduction: From Chatbots to Agents

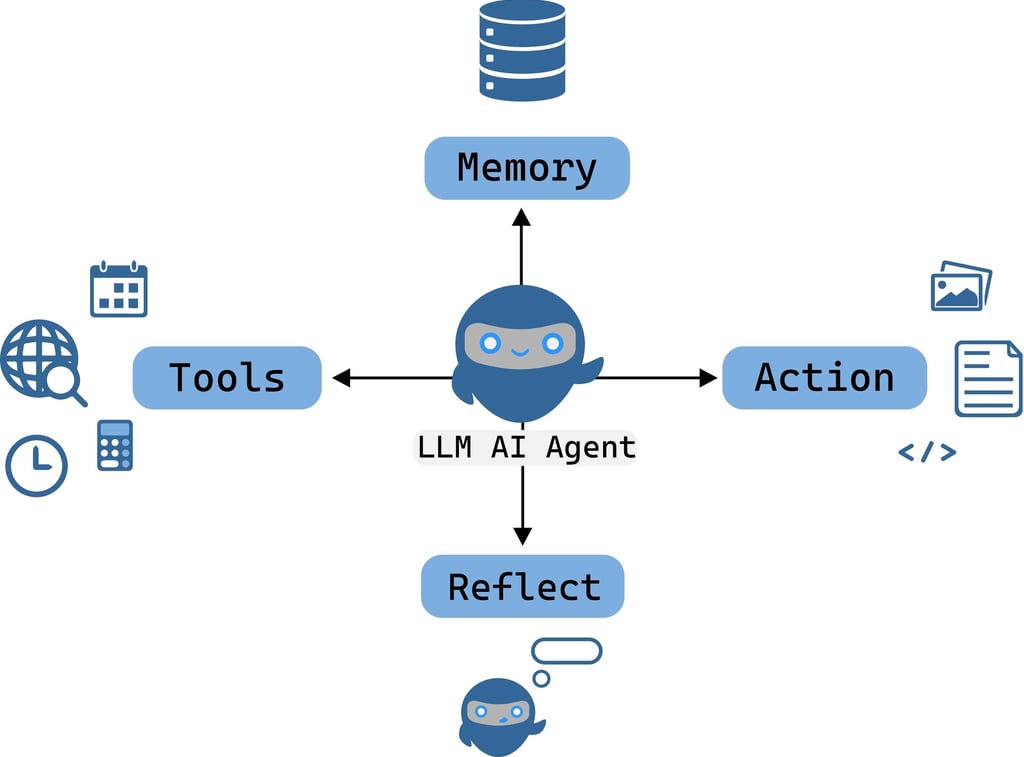

We are witnessing a pivotal shift in Artificial Intelligence. We are moving from "Chatbots" (systems that talk to you) to "Agents" (systems that work for you).

A chatbot can tell you how to write a SQL query. An agent can connect to your database, run the query, analyze the results, and email you a report.

The bottleneck for this transition has been connectivity. How do you safely, quickly, and reliably give an LLM access to your internal GitHub, your SQL database, or your Slack channels?

Enter the MCP Server.

1. What are MCP Servers? (The USB-C Analogy)

To understand MCP (Model Context Protocol), imagine the world before USB. If you wanted to connect a printer, a mouse, and a hard drive to your computer, you needed three different, clunky cables (a parallel port for the printer, PS/2 for the mouse, SCSI for the hard drive). It was a mess.

MCP is the USB-C for Artificial Intelligence.

The Host (Computer): This is the AI application (like Claude Desktop, Cursor, or your own AI app). It runs an MCP client for each server it connects to.

The MCP Server (Peripheral): This is a lightweight program that sits on top of your data or tool (like a Google Drive connector or a Postgres database connector).

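The Protocol (The Cable): MCP is the standardized language they use to talk, made of JSON-RPC 2.0 messages carried over a transport such as stdio (for local servers) or HTTP (for remote ones).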

Because of this standard, you don't need to teach the AI how to talk to your specific database. You just "plug in" the MCP server; the host asks it which tools it offers, and the AI immediately knows: "Ah, I see a database here. I can list tables and run queries."
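That "plug in" moment is a discovery step. After an initialization handshake (the `initialize` request, omitted here), the host sends a `tools/list` request and the server answers with each tool's `name`, `description`, and `inputSchema`. The sketch below hand-builds those JSON-RPC messages with the standard library only; the `list_tables` and `run_query` tools are hypothetical, and a real server would normally use an MCP SDK rather than raw dictionaries.

```python
import json

# Hypothetical tools a database MCP server might advertise.
TOOLS = [
    {
        "name": "list_tables",
        "description": "Lists every table in the connected database.",
        "inputSchema": {"type": "object", "properties": {}},
    },
    {
        "name": "run_query",
        "description": "Runs a read-only SQL SELECT query and returns the rows.",
        "inputSchema": {
            "type": "object",
            "properties": {"sql": {"type": "string"}},
            "required": ["sql"],
        },
    },
]


def handle_request(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request; only tools/list is handled in this sketch."""
    request = json.loads(raw)
    if request.get("method") == "tools/list":
        response = {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": TOOLS}}
    else:
        # -32601 is the JSON-RPC 2.0 "Method not found" error code.
        response = {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": -32601, "message": "Method not found"},
        }
    return json.dumps(response)


# What the host's MCP client sends to discover the server's tools.
discovery = json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
reply = json.loads(handle_request(discovery))
print([tool["name"] for tool in reply["result"]["tools"]])  # ['list_tables', 'run_query']
```

The descriptions in that reply are what the model actually reads when deciding which tool to call.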

2. The Fuel for Agentic Automation

Why is this specific technology driving the rise of agents? Because agents require three things that raw LLMs lack: Context, Action, and Safety.

A. Context is King

An LLM is a genius locked in an empty room. It knows a vast amount about the world up to its training cutoff, but nothing about your business. An MCP server acts as a window, giving the LLM a live view of your internal documentation, recent code commits, or customer logs without you needing to copy-paste gigabytes of text.
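MCP's name for this kind of read-only context is a resource: the server exposes data under a URI, and the host fetches it with a `resources/read` request whose result holds a `contents` list. A minimal sketch, assuming a hypothetical `docs://` URI scheme and an in-memory dictionary standing in for your internal documentation:

```python
import json

# Hypothetical in-memory store standing in for internal documentation.
DOCS = {
    "docs://onboarding": "New engineers start with the setup guide in the wiki.",
    "docs://on-call": "Page the on-call engineer for any P1 incident.",
}


def read_resource(raw: str) -> str:
    """Answer a resources/read request with the text stored at the requested URI."""
    request = json.loads(raw)
    uri = request["params"]["uri"]
    if uri not in DOCS:
        # Illustrative error: -32602 is JSON-RPC's generic "Invalid params" code.
        return json.dumps({
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32602, "message": f"Unknown resource: {uri}"},
        })
    contents = [{"uri": uri, "mimeType": "text/plain", "text": DOCS[uri]}]
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": {"contents": contents}})


req = json.dumps({"jsonrpc": "2.0", "id": 2, "method": "resources/read",
                  "params": {"uri": "docs://on-call"}})
print(json.loads(read_resource(req))["result"]["contents"][0]["text"])
```

Because the server reads the store on every request, the model sees the current text rather than a stale copy pasted into the prompt.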

B. From Text to Action

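Standard APIs are designed for human developers or rigid client code. MCP servers are designed for LLMs. They expose "Tools" (executable functions, each with a name, a plain-language description, and a JSON Schema describing its inputs) that the AI can understand and trigger.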

Without MCP: You ask, "Check the server status." The AI says, "I cannot do that."

With MCP: You ask, "Check the server status." The AI triggers the check_health tool on your Ops MCP server and reports, "The server is healthy, uptime 99.9%."
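When the model decides to use a tool, the host sends a `tools/call` request naming the tool and its arguments, and the server replies with a list of content blocks plus an `isError` flag. A minimal dispatch sketch, assuming `check_health` is a hypothetical function that returns canned values (a real Ops server would probe the actual service):

```python
import json
from typing import Callable


def check_health(arguments: dict) -> str:
    """Hypothetical health probe that returns a canned status for illustration."""
    service = arguments.get("service", "api")
    return f"The {service} server is healthy, uptime 99.9%."


# Registry mapping tool names to handler functions.
HANDLERS: dict[str, Callable[[dict], str]] = {"check_health": check_health}


def call_tool(raw: str) -> str:
    """Handle a JSON-RPC tools/call request by dispatching to a registered tool."""
    request = json.loads(raw)
    params = request["params"]
    handler = HANDLERS.get(params["name"])
    if handler is None:
        # An unknown tool name is a protocol error, not a tool result.
        return json.dumps({
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"},
        })
    text = handler(params.get("arguments", {}))
    result = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


request = json.dumps({
    "jsonrpc": "2.0", "id": 3, "method": "tools/call",
    "params": {"name": "check_health", "arguments": {"service": "api"}},
})
print(json.loads(call_tool(request))["result"]["content"][0]["text"])
```

The host passes that text back to the model, which turns it into the reply the user sees.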

C. Safety by Design

Connecting an AI to your database sounds scary. MCP servers reduce that risk by acting as a controlled gateway. You don't give the AI root access; you give it an MCP server that only exposes specific, safe read-only commands.
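"Read-only" is safest when the database enforces it, not just the tool description. The sketch below uses SQLite's `query_only` pragma so that a write fails even if the model sends one; the `customers` table and the `run_query` tool function are hypothetical. For Postgres, the equivalent idea is to connect the server with a database role that has only SELECT privileges.

```python
import sqlite3

# Hypothetical data the MCP server sits on top of.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO customers (name) VALUES ('Ada'), ('Grace')")
conn.commit()

# From here on, SQLite itself rejects any statement that would modify the database.
conn.execute("PRAGMA query_only = ON")


def run_query(sql: str) -> str:
    """Hypothetical read-only tool: returns rows, or a readable error for the agent."""
    try:
        rows = conn.execute(sql).fetchall()
    except sqlite3.Error as exc:
        return f"Query rejected: {exc}"
    return str(rows)


print(run_query("SELECT name FROM customers ORDER BY id"))  # [('Ada',), ('Grace',)]
print(run_query("DELETE FROM customers"))  # prints a "Query rejected: ..." message
```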


3. The "Edge" in Product Development

Why should a product leader or engineering manager care about MCP? Because it solves the "m × n" problem.

In the past, if you used 3 different AI providers (OpenAI, Anthropic, Mistral) and wanted each of them to connect to 3 different tools (Jira, Linear, Slack), you had to write 9 different integrations.

With MCP, you build 3 MCP servers (one for each tool). Now any MCP-compatible host can connect to them, whichever model it runs, without new integration code.
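The arithmetic behind this comparison, writing m for the number of AI models or applications and n for the number of tools: without a shared protocol, every model-tool pair needs its own integration; with MCP, each tool needs one server and each host implements the MCP client once (usually the host vendor's job, which is why your own work drops to the 3 servers).

```latex
\underbrace{m \times n}_{\text{custom integrations}} = 3 \times 3 = 9
\qquad\text{versus}\qquad
\underbrace{m + n}_{\text{MCP clients} + \text{MCP servers}} = 3 + 3 = 6
```

The gap widens with scale: at m = n = 10, it is 100 custom integrations versus 20 MCP components.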

Strategic Benefits:

Future-Proofing: When the next "killer" AI model launches next week, you don't need to rebuild your backend. You just connect it, through any MCP-compatible host, to your existing MCP servers.

Modular Development: Your backend teams can build "Data Connectors" (MCP Servers) independently of the frontend AI teams.

Ecosystem Leverage: The open-source community is already building MCP servers for popular tools (Postgres, Google Drive, Git). You can download these off the shelf instead of building them from scratch.

4. Best Practices: Building Your MCP Servers

If you decide to build an MCP server to give your AI agents an edge, follow these guidelines to ensure security and reliability.

The DOs (Green Flags) ✅

DO Keep It Simple: Follow the "Single Responsibility Principle." Build one MCP server for your Database and a separate one for your File System. Don't create a monolithic "God Server."

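DO Use Standardized Logging: Agents can't "see" errors like humans can. Use robust logging so you can debug why an agent failed to execute a tool, and send it to stderr: on the stdio transport, stdout carries the protocol messages, so stray log lines there corrupt the stream.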

DO Implement "Dry Runs": For sensitive actions (like deleting a database row), create a tool that allows the agent to preview the action before executing it.

DO Optimize for LLM Readability: When defining your tools in the MCP server, write the descriptions for the AI, not for other developers. State what the tool does, what it requires, and what it returns.

Bad: func delete_user(id)

Good: func delete_user(id) - Deletes a user from the active database. Requires admin approval. Returns success status.
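Several of these guidelines fit together in a single tool. A minimal sketch, assuming a hypothetical `delete_user` tool over an in-memory user table: it logs to stderr with Python's `logging` module, its description is written for the model, and it defaults to a dry run that only previews the change. Approval workflows are left out of this sketch.

```python
import logging
import sys

# Log to stderr so stdout stays free for protocol messages on the stdio transport.
logging.basicConfig(stream=sys.stderr, level=logging.INFO,
                    format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("users-mcp-server")

# Hypothetical stand-in for the "active database".
USERS = {42: "ada@example.com", 7: "grace@example.com"}

# Tool definition written for the model, not for other developers.
DELETE_USER_TOOL = {
    "name": "delete_user",
    "description": (
        "Permanently deletes a user from the active database. "
        "Call with dry_run=true first to preview which user would be removed. "
        "Returns a success status."
    ),
    "inputSchema": {
        "type": "object",
        "properties": {
            "id": {"type": "integer", "description": "Numeric user ID."},
            "dry_run": {"type": "boolean", "default": True},
        },
        "required": ["id"],
    },
}


def delete_user(user_id: int, dry_run: bool = True) -> str:
    """Preview or perform a deletion; defaults to the safe preview."""
    log.info("delete_user called: id=%s dry_run=%s", user_id, dry_run)
    if user_id not in USERS:
        return f"No user with id {user_id} exists; nothing was deleted."
    if dry_run:
        return f"Dry run: would delete user {user_id} ({USERS[user_id]})."
    del USERS[user_id]
    log.warning("user %s deleted", user_id)
    return f"Success: user {user_id} deleted."


print(delete_user(42))                 # Dry run: would delete user 42 (ada@example.com).
print(delete_user(42, dry_run=False))  # Success: user 42 deleted.
```

Defaulting `dry_run` to true means an agent that omits the argument gets a preview, not a deletion.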

The DONTs (Red Flags) ❌

DON'T Expose Raw APIs: Never wrap your entire internal API blindly. Only expose the specific, safe functions the agent actually needs to do its job.

DON'T Forget Rate Limiting: Agents can be enthusiastic. If an agent gets into a loop, it might hit your internal API 1,000 times in a minute. Implement rate limits at the MCP server level.

DON'T Return "Not Found" Silently: If an agent searches for data and finds nothing, don't just return null. Return a descriptive message: "Search completed for term 'X' but no records were found. Try searching for a related term." This helps the agent self-correct.

DON'T Ignore Latency: LLMs are already slow, and every tool call adds to the wait. Ensure your MCP server tools execute quickly (within a few seconds) so the host does not time out the request.
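The red flags above translate into a few lines of server-side code. A minimal sketch, assuming a hypothetical `search_records` tool over an in-memory list: a sliding-window limiter caps calls per minute, a time budget stops the server from hanging on slow work, and an empty result returns guidance instead of null. The limits (30 calls per 60 seconds, a 5-second budget) are illustrative, not recommendations.

```python
import time
from collections import deque
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout

RECORDS = ["invoice 1001 for Acme", "invoice 1002 for Globex"]  # hypothetical data
EXECUTOR = ThreadPoolExecutor(max_workers=4)


class RateLimiter:
    """Sliding-window limiter: allows at most max_calls within window_seconds."""

    def __init__(self, max_calls: int, window_seconds: float) -> None:
        self.max_calls = max_calls
        self.window = window_seconds
        self.calls = deque()  # monotonic timestamps of recent allowed calls

    def allow(self) -> bool:
        now = time.monotonic()
        # Drop timestamps that have slid out of the window.
        while self.calls and now - self.calls[0] >= self.window:
            self.calls.popleft()
        if len(self.calls) >= self.max_calls:
            return False
        self.calls.append(now)
        return True


limiter = RateLimiter(max_calls=30, window_seconds=60.0)


def _search(term: str) -> list:
    """Case-insensitive substring search over the hypothetical records."""
    return [r for r in RECORDS if term.lower() in r.lower()]


def search_records(term: str, timeout_seconds: float = 5.0) -> str:
    """Hypothetical tool combining rate limiting, a time budget, and helpful empty results."""
    if not limiter.allow():
        return (f"Rate limit reached ({limiter.max_calls} calls per "
                f"{limiter.window:.0f} seconds). Wait before searching again.")
    future = EXECUTOR.submit(_search, term)
    try:
        hits = future.result(timeout=timeout_seconds)
    except FutureTimeout:
        return f"Search for '{term}' exceeded {timeout_seconds} seconds. Try a narrower term."
    if not hits:
        return (f"Search completed for term '{term}' but no records were found. "
                "Try searching for a related term.")
    return "\n".join(hits)


print(search_records("acme"))     # invoice 1001 for Acme
print(search_records("initech"))  # the descriptive "no records were found" message
```

A timed-out task keeps running in its worker thread; this sketch only stops waiting for it, so genuinely slow backends still need their own cancellation.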

Conclusion: The First-Mover Advantage

The "Rise of MCP Servers" is akin to the early days of APIs. The companies that adopted API-first architectures moved faster and integrated better than their competitors. Today, adopting an MCP-first architecture prepares your infrastructure for a future where AI agents are the primary users of your software.

By standardizing how your data and tools speak to AI, you aren't just building better automations today; you are building a product that is ready for whatever the AI revolution throws at you tomorrow.